January 24, 2019

Should You A/B Test?

First of all, what does A/B testing mean?

A/B testing starts when you want to be sure you’re making the right decision.

Simply put, A/B testing compares the effectiveness of two (or more) variants of the same design by launching them under similar circumstances.
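Launching variants "under similar circumstances" usually starts with splitting users fairly between them. Below is a minimal sketch of one common approach, hash-based bucketing. It assumes each user has a stable ID. `assign_variant` and the experiment name `saved-section-placement` are hypothetical and do not come from Instagram or any particular testing tool.

```python
import hashlib


# Hypothetical helper for illustration, not any testing tool's API.
def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Map a user to one variant, the same way every time.

    Hashing the experiment name together with the user ID gives each user a
    stable bucket that is roughly uniform across users, so every variant gets
    a similar mix of people while each person keeps seeing the same variant.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# Usage: split 10,000 hypothetical users between two variants.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "saved-section-placement", ["A", "B"])] += 1
print(counts)  # close to 5,000 each
```

Including the experiment name in the hash means one user can land in different groups across different experiments, so a user is not stuck in "variant B" for every test.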

A/B tests should be driven by a clear cause and a clear objective, because the problem that prompted the experiment in the first place becomes your hypothesis.

The objective

Setting your objective means deciding whether you want to optimize a current state (to find the best way to do an activity) or validate an idea (to find which activity is best).

A/B testing grows out of the problem you want to fix: a metric you want to improve, or a metric that is not performing as you hoped. This kind of analysis helps you make better product decisions that can have a huge impact on your users’ experience. A test can be as simple as comparing two colors for a CTA button on an online store, or finding just the right place for a new feature.

For example, I recently noticed that Instagram was running an A/B test on their interface around where saved photos are stored and where the “Saved” section should be. They had three variants, and I could see each of them across my different accounts, which was really interesting to observe as a UX designer. I assume they picked a winner, because I can only see one version now. I believe their goal was to keep the interface clean while adding new features without disrupting users’ usual activity: keep us scrolling without getting frustrated that things are changing. Every change a user faces should go through A/B testing to make sure it fits business goals.

Reasons why A/B tests fail

Starting with the wrong hypothesis.

A hypothesis basically means answering a simple question:

What (should happen) if?

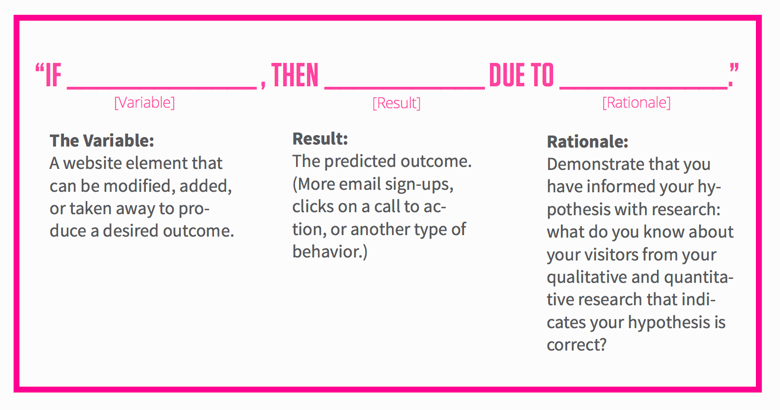

This is a prediction you make before running the experiment. It clearly states what is being changed, what you believe the outcome will be, and why you think so. Running the experiment will either prove or disprove your hypothesis.

Here’s a hypothesis formula from Optimizely:

Not taking statistical significance into consideration.

Optimizely explains it this way:

Statistical significance is important because it gives you confidence that the changes you make to your website or app actually have a positive impact on your conversion rate and other metrics. Your metrics and numbers can fluctuate wildly from day to day, and statistical analysis provides a sound mathematical foundation for making business decisions and eliminating false positives.
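To make "statistical significance" concrete, here is a minimal sketch of one common check, a two-proportion z-test on conversion rates. It is a textbook test, not necessarily what Optimizely or any other tool computes internally, and the conversion counts are made up for illustration.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_* are conversions and n_* are users exposed to each variant.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis "both variants convert equally".
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Made-up numbers: the same 4% -> 5% lift, with very different amounts of evidence.
z_big, p_big = two_proportion_z_test(200, 5_000, 250, 5_000)
z_small, p_small = two_proportion_z_test(20, 500, 25, 500)
print(f"5,000 users per variant: z = {z_big:.2f}, p = {p_big:.3f}")
print(f"500 users per variant:   z = {z_small:.2f}, p = {p_small:.3f}")
```

With 5,000 users per variant the gap is significant at the usual 0.05 level (p is about 0.016). With 500 users per variant the same gap gives p of about 0.45, which is exactly the kind of fluctuation the quote warns about.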

Solving too many issues at once.

Changing too many things at the same time makes it difficult to know which change influenced the results. That’s why you should test components one after another, so the results don’t get mixed up. Prioritize and solve the most critical issue first.

Things that can improve your A/B testing results

The Timeline:

A test needs to run for a sufficient amount of time to collect valuable results. Too short a period can lead products into the trap of early, imaginary wins. You don’t need to set a specific minimum or maximum number of users engaging with your test before declaring a winning variant, but make sure the time period is long enough for you to see numbers you can trust.
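The early-wins trap can be shown with a small simulation. It assumes two identical variants (so any "winner" is a false positive), a hypothetical 5% conversion rate, 100 new users per variant per day, and a team that checks significance every day and declares a winner at the first significant result. All of these parameters are made up for illustration.

```python
import random
from math import erfc, sqrt


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):  # no variation yet, nothing to test
        return 1.0
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / std_err
    return erfc(abs(z) / sqrt(2))


# Hypothetical parameters: traffic, rate and duration are illustrative only.
def simulate_peeking(runs=400, days=20, users_per_day=100, rate=0.05, alpha=0.05, seed=7):
    """Fraction of identical-variant tests that "find a winner".

    Returns (rate when checking every day and declaring a winner at the first
    significant result, rate when checking only once at the end).
    """
    rng = random.Random(seed)
    peeking_wins = end_only_wins = 0
    for _run in range(runs):
        conv_a = conv_b = n = 0
        won_while_peeking = False
        for _day in range(days):
            # Both variants share the same true rate, so any "win" is a false positive.
            conv_a += sum(rng.random() < rate for _ in range(users_per_day))
            conv_b += sum(rng.random() < rate for _ in range(users_per_day))
            n += users_per_day
            if p_value(conv_a, n, conv_b, n) < alpha:
                won_while_peeking = True
        peeking_wins += won_while_peeking
        end_only_wins += p_value(conv_a, n, conv_b, n) < alpha
    return peeking_wins / runs, end_only_wins / runs


peeking, end_only = simulate_peeking()
print(f"False wins when peeking daily: {peeking:.0%}")
print(f"False wins when checking once at the end: {end_only:.0%}")
```

In runs like this, the daily-peeking rate typically comes out several times higher than the nominal 5%, while the check-once rate stays close to it. Letting the test run for its full period before reading the result is what keeps those imaginary wins out.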

The Prediction API:

Predictive APIs help improve the app experience by learning how users behave inside the app and predicting whether a user is likely to stay or leave, based on the steps they need to take. Sometimes only users who take certain steps are exposed to the test, so hints are a good way of guiding users toward the “tested territory” inside your app.

The A/A Test:

A/A testing means using A/B testing to test two identical versions against each other. Typically, this is done to check that the tool being used to run the experiment is statistically fair. In an A/A test, the tool should report no difference in conversions between the control and variations, and the test also helps confirm that users are being distributed between the groups as intended.
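Here is a minimal sketch of the two checks an A/A test is meant to pass, assuming you can export per-group user and conversion counts from your tool: the split between groups matches the intended 50/50 ratio (a chi-square "sample ratio mismatch" check), and the identical versions show no significant difference in conversions. The function names and the deliberately strict 0.001 threshold for the split check are assumptions for illustration.

```python
from math import erfc, sqrt


def sample_ratio_p_value(n_a: int, n_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square test (1 degree of freedom) that the split matches the intended ratio."""
    total = n_a + n_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = (n_a - expected_a) ** 2 / expected_a + (n_b - expected_b) ** 2 / expected_b
    # For 1 degree of freedom, P(chi2 >= x) = erfc(sqrt(x / 2)).
    return erfc(sqrt(chi2 / 2))


def conversion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a two-proportion z-test on conversion rates."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / std_err
    return erfc(abs(z) / sqrt(2))


# Hypothetical helper; split_alpha=0.001 is an assumed threshold.
def aa_report(n_a, conv_a, n_b, conv_b, alpha=0.05, split_alpha=0.001):
    """Summarize the two things an A/A test should show.

    split_ok: users were distributed between the groups as intended.
    no_difference: the identical versions show no significant difference.
    """
    return {
        "split_ok": sample_ratio_p_value(n_a, n_b) >= split_alpha,
        "no_difference": conversion_p_value(conv_a, n_a, conv_b, n_b) >= alpha,
    }


print(aa_report(5_000, 250, 5_000, 255))  # healthy A/A test
print(aa_report(5_000, 250, 4_600, 230))  # one group is missing users
```

The second call shows why both checks matter: the conversion rates match perfectly, yet the uneven split suggests the test is not reaching all the users it should.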

So, should you A/B test?

Experiments help you make better product decisions, so A/B testing can be seen as guidance that backs you up with data. Just make sure that when you improve one metric, no other metrics are impacted negatively.

I want to thank two awesome collaborators who contributed to the A/B knowledge shared here: Aakash Varshney and Bhakti Sudha Naithani.

This post was originally published by Iulia on Medium here.
