Healthcare IT Today Podcast: 4 Opportunities to Ease the Tension Between Payers & Providers

When it comes to providers and payers, there’s no avoiding the tension that exists between the two. Ultimately, one’s revenue is the other’s costs. There’s also the fact that providers and payers are optimizing for different things. Providers want to ensure patients get the best care. Individual clinicians are incentivized to provide more care at the individual level to serve the patient and avoid malpractice suits; at the institutional level, more procedures mean more revenue, typically. Payers, on the other hand, face their own competitive dynamics as they sell to employers and individuals who want low premiums above all else.

You might be surprised to learn that despite the inevitably of this tension, there also lies plenty of opportunity in the space between providers and payers. In a recent episode on Healthcare IT Today Interviews, Steve Rowe, Healthcare Industry Lead at 3Pillar, and host John Lynn discuss why this tension exists and what can be done about it. We’ve captured the four biggest opportunities below.

1. RCM and Claims

The first opportunity is around Revenue Cycle Management (RCM) and claims. Payers have all sorts of different rules around what they will approve and what they’ll deny to balance the tension between keeping premiums low and paying for medical coverage. These rules are sometimes even group-specific.

The challenge? Providers don’t know what those rules are, which creates difficulties for the member. It’s not easy to understand at the moment what will be approved and what will be denied. That means patients may end up unhappy when a proposed treatment is denied or not paid in full (and they are balanced billed).

The opportunity here is for payers to expose that logic to health systems—essentially preadjucating payment (instead of doing it after the fact). The business rationale: make it easy for in-network providers to get paid in exchange for more competitive rates. Some companies are already doing this: “Glen Tullman is doing it with Transcarent; he’s essentially trying to intermediate the payer to create a new network. His whole premise to providers is, ‘Join our network because we will pay you the same day you do service,’” notes Steve. “That’s how he’s building his network with the top healthsystem and doctors.”

2. RCM Complexity

In Steve’s experience building an RCM startup and working with a regional Urgent Care chain, he observed that the expertise and institutional knowledge around claims processing was largely in the heads of the medical billing coders.

There are two main forms of complexity in RCM he highlights:

- Submitting the correct eligibility information (e.g. specific formatting of member ID numbers)

- Matching the right diagnostic codes to the appropriate CPT codes, which can be a large and complex matrix.

The risk here is that this institutional knowledge will be lost when these experienced medical billers retire. The processes are very manual, with reimbursements not keeping up with labor inflation. 3Pillar is leveraging AI and data mining to reverse engineer each payer’s algorithm for approvals and denials. The goal is to systematize this knowledge and flag issues proactively, rather than relying on the institutional knowledge of the billing staff.

The vision is to integrate this RCM intelligence engine with clinical documentation tools. That way providers are alerted in real-time during the care planning process about treatments or codes that are likely to be denied by the payer. This will improve the financial experience for providers and patients alike.

3. The Need for Data Transformation

There is a significant opportunity for data transformation as regional payers have data that lives in separate systems that don’t talk to each other. The pipes to connect these systems haven’t been built and the data isn’t defined in the same way. Regional payers are often at a technological disadvantage compared to national payers because they still have on-premise servers and haven’t moved to the cloud. The IT departments for these payers are swamped putting out fires. They simply don’t have the resources to take on the work associated with major technology modernization projects.

And here’s the rub: Self-insured employers want highly customized insurance products and plans that require flexible and configurable technology platforms. National payers have invested in modern tech stacks that can support this level of customization. However, regional payers struggle to match this same capability.

So, there’s a real need for regional payers to create a unified data platform and operating system that can integrate data from various systems (e.g., claims, population health, PBM, etc.). This would result in a simplified member experience while enabling seamless workflows for call center representatives, who often have to navigate multiple disparate systems. This is an area where working with a partner who specializes in this capability would be beneficial.

4. Real-Time Answers to Member Questions

Speaking of member experience, it’s now the number one concern of Vice Presidents of Benefits at self-insured employers thanks to a tight labor market. Top-tier benefits are necessary to attract and retain talent. There’s no doubt that there’s plenty of room to improve. The experience is often fragmented and frustrating as members struggle to get accurate information about coverage, costs, and provider networks.

There’s an opportunity for payers to make their medical policies and coverage algorithms more transparent and accessible to members at the point of care. Steve explains, “I’m excited about this opportunity because we’ve all been there where it’s like, ‘I just want to know if this particular provider for urgent care who is still open at 10 p.m. is in network. I can’t figure that out on the app. There’s not a search function and the call line doesn’t open until 8 a.m. tomorrow.”

What if patients could get real-time answers to their questions? 3Pillar is making that vision a reality through chatbots powered by AI and knowledge graphs. By using AI to combine data from disparate systems, members can get accurate, up-to-date information at any time, from anywhere.

These chatbots could also help to address the challenge of call center representatives needing to navigate multiple systems to piece together an answer for a member. Steve points out one key consideration: ensuring the chatbots are fed accurate data and avoiding hallucinations. Doing so requires careful design and integration with the underlying data sources.

While none of these opportunities have “easy buttons” to press, they all provide means for payers to differentiate themselves and better serve patients and providers. You can discover even more areas for payers and providers to win in the full podcast episode.

Recent posts

Building an AI-Enabled SDLC: Insights from 3Pillar and Forrester

As AI reshapes how software gets built, many organizations are asking the same question: where do we start—and how do we make it real?

That was the focus of a recent joint webinar hosted by 3Pillar and Forrester, titled AI & the SDLC: Beyond the Hype to a Practical Roadmap. In a candid discussion, Lance Mohring and Scott Young, Field CTOs at 3Pillar, joined special guest Devin Dickerson, Principal Analyst at Forrester, to explore what true AI-enabled velocity looks like – and how enterprises can prepare for it.

The conversation surfaced a clear message: success in the AI-driven SDLC isn’t about chasing tools. It’s about understanding maturity, orchestrating change, and building the right foundation for long-term impact.

The AI-enabled SDLC maturity model

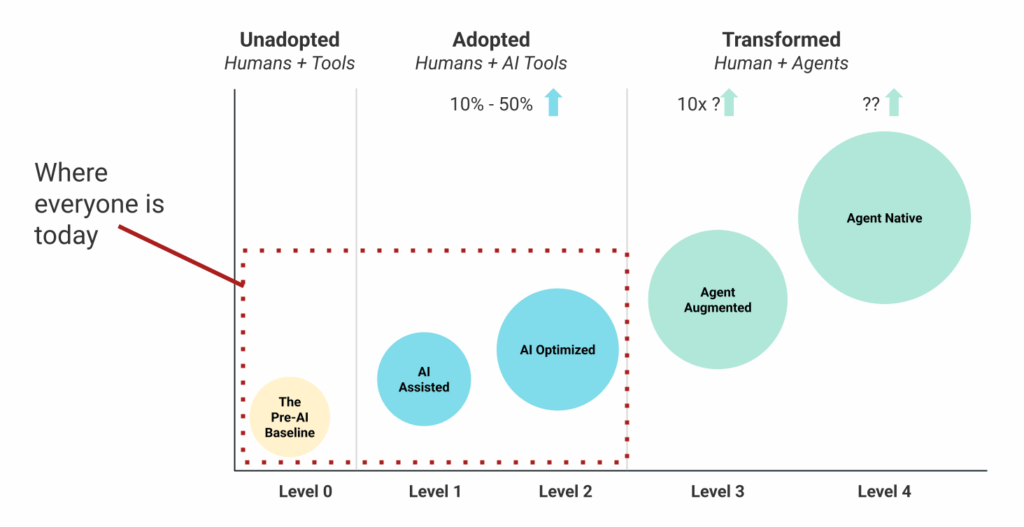

To ground the discussion, 3Pillar introduced its AI-enabled SDLC maturity model – a framework designed to help technology and product leaders assess where they stand today and define the next step forward.

The model maps the evolution from today’s fragmented experimentation to tomorrow’s orchestrated, AI-native development environments:

- Level 0 – Classic SDLC: Traditional agile, kanban, and waterfall practices. Stable and familiar, supported by a mature tool set, but largely manual.

- Level 1 – AI-Assisted: Individual practitioners begin experimenting with tools like code assistants, design generators, or test automation. Progress is real but inconsistent.

- Level 2 – AI-Optimized: The organization formalizes adoption; governance, tool selection, and integration create measurable efficiency gains across the lifecycle.

- Level 3 – Agent-Augmented: Planning agents begin managing end-to-end workflows, coordinating across roles and tools. Humans move “over the loop,” providing direction and validation. Scrum is supplanted as the dominant work management method.

- Level 4 – Agent-Native: Teams operate in fully AI-orchestrated environments where human expertise focuses on strategy, design, and value creation. The process is optimized for AI, with the humans focusing on inputs and outputs

“We built this to give leaders a way to visualize progress – to see not just what’s possible, but what’s practical today.” – Scott Young

Where most organizations are today

While the framework spans five levels, most organizations remain early in the journey. Dickerson observed that maturity is uneven by nature.

“You might have a few developers running sophisticated agent workflows while others on the same team don’t see the value yet. AI maturity isn’t linear—it’s fragmented.” – Devin Dickerson

In other words, enterprise adoption today looks more like a patchwork than a transformation. Designers may experiment with AI for ideation; developers may use copilots for small code snippets. But without alignment, these efforts plateau quickly.

Young illustrated the point through a real example: after using an LLM to prototype a React app, he handed it off to a designer – who had no idea how to integrate it into Figma. “We’re getting velocity at the individual level,” he noted, “but we’re not scaling collaboration.”

This is the defining marker of Level 2: impressive local gains that don’t yet translate into systemic value.

The “faster horse” moment

To explain this dynamic, Young used a powerful metaphor. In the early 1900s, when Henry Ford asked people what they wanted, they said “a faster horse.” What they really needed was a car.

Many organizations are in a similar moment today – seeking incremental speed in legacy processes rather than reimagining the system itself. They’re improving story writing, test automation, or code generation, but still within the same SDLC architecture.

“The real shift happens when you stop thinking about tools and start thinking about orchestration.” – Lance Mohring

What true AI velocity looks like

When orchestration replaces isolated experimentation, the returns multiply. Moving from AI-assisted to agent-augmented workflows can unlock 10× efficiency gains in focused use cases.

At this stage, humans shift from “in the loop” to “over the loop.” Instead of manually prompting each task, they oversee a network of agents performing coordinated roles – architect, tester, product manager, developer – each operating semi-autonomously.

This new structure changes more than output speed. It collapses the traditional seams of the SDLC, accelerates feedback loops, and forces organizations to rethink concepts like backlogs, sprints, and even technical debt.

“Technical debt may become less relevant. The AI doesn’t care that your code isn’t elegant—it just makes the change everywhere instantly.” – Scott Young

But acceleration without governance can magnify risk. Trust, quality, and validation processes must evolve just as quickly. “Did we build the right thing?” remains the most important question.

From task agents to planning agents

In the next phase of maturity, the SDLC becomes less about humans instructing narrow task agents and more about planning agents coordinating entire workflows.

A task agent might add a new field to a form; an planning agent could translate a product requirement document into a full sprint backlog, direct subordinate agents to execute each story, and verify completion – all under human supervision.

“We’re moving from delegating tasks to delegating responsibility—while humans retain accountability.” – Devin Dickerson

This evolution redefines roles. Product managers, architects, and designers become system conductors rather than task executors. The orchestration layer becomes the new frontier of competitive advantage.

Turning insight into action

The speakers closed with four practical recommendations for leaders beginning this journey:

- Start now. Experiment with code-generation use cases to build understanding of what AI can – and can’t – do.

- Prioritize proficiency. Invest in context engineering and prompt literacy. The real gains come from knowing how to guide AI effectively.

- Identify high-impact use cases. Focus on choke points – testing, QA, or backlog refinement – where automation drives visible results without high risk.

- Keep people at the center. Balance governance with creativity. Reinvest productivity gains into upskilling your teams and nurturing cross-functional collaboration.

“AI in the SDLC isn’t a single project – it’s an organizational transformation that demands structure, experimentation, and empathy in equal measure.”

Watch the full webinar

The complete conversation between 3Pillar and Forrester offers far more depth – including examples of real-world implementation and predictions for what’s next in AI-native product development.

Recent posts

Building a New Testing Mindset for AI-Powered Web Apps

The technology landscape is undergoing a profound transformation. For decades, businesses have relied on traditional web-based software to enhance user experiences and streamline operations. Today, a new wave of innovation is redefining how applications are built, powered by the rise of AI-driven development.

However, as leaders adopt AI, a key challenge has emerged: ensuring its quality, trust, and reliability. Unlike traditional systems with clear requirements and predictable outputs, AI introduces complexity and unpredictability, making Quality Assurance (QA) both more challenging and more critical. Business decision-makers must now rethink their QA strategy and investments to safeguard reputation, reduce risk, and unlock the full potential of intelligent solutions.

If your organization is investing in AI capabilities, understanding this quality challenge isn’t just a technical concern, it’s a business necessity that could determine the success or failure of your AI initiatives. In this blog, we’ll explore how AI-driven development is reshaping QA — and what organizations can do to ensure quality keeps pace with innovation.

Why traditional testing falls short

Let’s take a practical example. Imagine an Interview Agent built on top of a large language model (LLM) using OpenAI API. Its job is to screen candidates, ask context-relevant questions, and summarize responses. Sounds powerful, but here’s where traditional testing challenges emerge:

Non-deterministic outputs

Unlike a rules-based form, the AI agent might phrase the same question differently each time. This variability makes it impossible to write a single “pass/fail” test script.

Dynamic learning models

Updating the model or fine-tuning with new data can change behavior overnight. Yesterday’s green test might fail today.

Contextual accuracy

An answer can be grammatically correct yet factually misleading. Testing must consider not just whether the system responds, but whether it responds appropriately.

Ethical and compliance risks

AI systems can accidentally produce biased or non-compliant outputs. Testing must expand beyond functionality to include fairness, transparency, and safety.

Clearly, a new approach is needed.

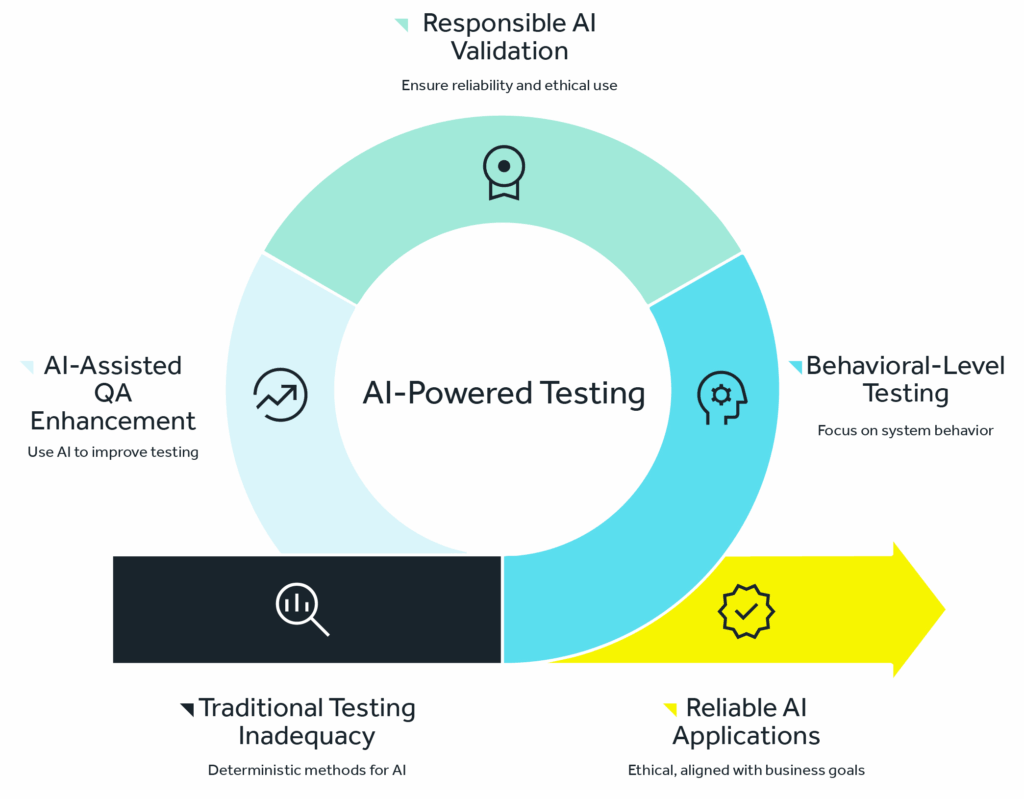

AI-powered testing

So, what does a modern approach to testing look like? We call it the AI-powered test, a fresh approach that redefines quality assurance for intelligent systems. Instead of force-fitting traditional, deterministic testing methods onto non-deterministic AI models, businesses need a flexible, risk-aware, and AI-assisted framework.

At its core, AI-powered testing means:

- Testing at the behavioral level, not just the functional level.

- Shifting the question from “Does it work?” to “Does it work responsibly, consistently, and at scale?”

- Using AI itself as a tool to enhance QA, not just as a subject to be tested.

This approach ensures that organizations not only validate whether AI applications function, but also whether they are reliable, ethical, and aligned with business goals.

Pillars of AI-powered testing

To make this shift practical, we recommend you plan your AI QA strategy around the following key pillars:

1. Scenario-based validation

Instead of expecting identical outputs, testers validate whether responses are acceptable across a wide range of real-world scenarios. For example, does the Interview Agent always ask contextually relevant questions, regardless of candidate background or job description?

2. AI evaluation through flexibility

AI systems should be judged on quality ranges rather than rigid outputs. Think of it as setting “guardrails” instead of a single endpoint. Does the AI stay within acceptable tone, accuracy, and intent even if the exact wording varies?

3. Continuous monitoring and drift detection

Since AI models evolve, testing can’t be a one time activity. Organizations must invest in continuous monitoring to detect shifts in accuracy, fairness, or compliance. Just as cybersecurity requires constant vigilance, so too does AI assurance.

4. Human judgment

Automation is powerful, but human judgment remains essential. QA teams should include domain experts who can review edge cases and make subjective assessments that machines can’t. For business leaders, this means budgeting not only for automation tools but also for skilled oversight.

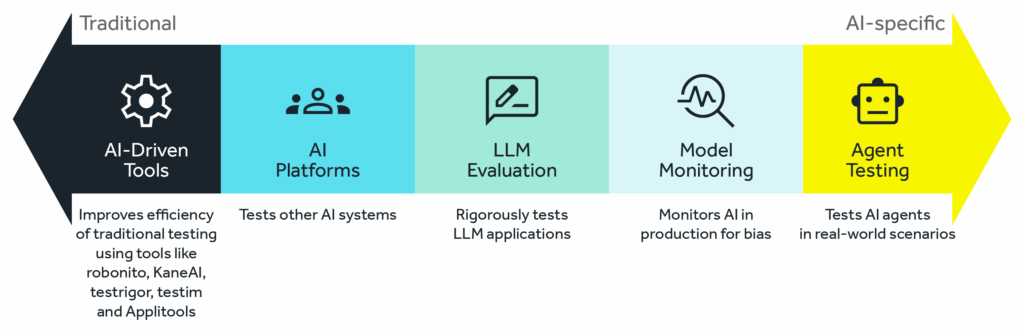

The future is moving from ‘AI for testing’ to ‘testing for AI’

AI is reshaping every part of the technology ecosystem, and software testing is no exception. We have AI-driven test automation tools like robonito, KaneAI, testRigor, testim, loadmill and Applitools. These are powerful allies that use AI to make traditional testing faster and more efficient. They can write test scripts from plain English, self-heal when the user interface changes, and intelligently identify visual bugs.These tools are excellent for improving the efficiency of testing the traditional applications.

But the real frontier is “AI platforms designed to test other AIs.” This is where the future lies. Think of these as “AI test agents,” specialized AI systems built to audit, challenge, and validate other AI. This emerging space is transforming how we think about quality assurance in the age of intelligent systems.

Key directions in “testing for AI”

- LLM evaluation platforms: New platforms are being developed to rigorously test applications powered by LLMs. For example , an Interview Agent can generate thousands of diverse, adversarial prompts to check for robustness, test for toxic or biased outputs, and compare the model’s responses against a predefined knowledge base to check for factual accuracy/hallucinations.

- Model monitoring & bias detection tools: Companies like Fiddler AI and Arize AI provide platforms that monitor your AI in production. They act as a continuous QA system, flagging data drift (when real-world data starts to look different from training data) and detecting in real-time if the model’s outputs are becoming biased or skewed.

- Testing for AI: There are many companies working on AI agent testing and agent-to-agent testing tools. For example, LambdaTest recently launched a beta version of its agent-to-agent testing platform — a unified environment for testing AI agents, including chatbots and voice assistants, across real-world scenarios to ensure accuracy, reliability, efficiency, and performance.

Why this matters for business leaders

From a C-suite perspective, investing in AI-powered testing isn’t just a technical decision, it’s a business imperative. Here’s why:

- Customer trust

A chatbot that provides incorrect medical advice or a hiring tool that shows bias can damage brand reputation overnight. Quality isn’t just about uptime anymore it’s about ethical, reliable experiences.

- Regulators are watching

AI regulation is tightening worldwide. Whether it’s GDPR, the EU AI Act, or emerging US frameworks, organizations will be held accountable for how their AI behaves. Testing for compliance should be part of your risk management strategy.

- Cost of failure

With AI embedded in core business processes, errors don’t just affect a single user, they can cascade across markets and stakeholders. Proactive QA is far cheaper than reactive damage control.

- Competitive advantage

Companies that can assure reliable, responsible AI will differentiate themselves. Just as “secure by design” became a competitive market in software, “trustworthy AI” will become a business differentiator.

Building your AI QA roadmap

So, how should an executive get started? Here’s a phased approach we recommend to clients:

Phase 1: Assess current gaps

Map where AI is currently embedded in your systems. Identify areas where quality risks could impact customers, compliance, or brand reputation.

Phase 2: Redefine QA metrics

Move beyond pass/fail. Introduce new metrics such as accuracy ranges, bias detection, explainability scores, and response relevance.

Phase 3: Invest in AI-powered tools

Adopt platforms that can automate scenario generation, inconsistency detection, and continuous monitoring. Look for solutions that scale with your AI adoption.

Phase 4: Build cross-functional oversight

Build a governance model that includes compliance, legal, and business leaders alongside IT. Quality must reflect business priorities, not just technical checklists.

Phase 5: Establish continuous governance

Treat AI QA as an ongoing discipline, not a project phase. Regularly review model performance, monitor for drift, and update guardrails as the business evolves.

Final thought

The era of AI-driven applications is here and it’s accelerating. But with innovation comes responsibility. Traditional QA approaches built for deterministic systems are no longer sufficient. By adopting an AI-powered testing strategy, organizations can ensure their AI systems are not only functional but also ethical, reliable, and aligned with business goals.

The message for leaders is clear: if you want to harness AI as a competitive advantage, you must also invest in the processes that make it trustworthy. Modern QA is no longer just about preventing bugs, it’s about protecting your brand, your customers, and securing your organization’s future in an AI-first world.

Ensure your AI delivers value you can trust. Connect with our team to define a scalable, responsible AI QA strategy.

Recent posts

The Human Behind the Machine: Why Synthetic Audiences Can’t Replace Authentic User Interviews

The Human Behind the Machine: Why Synthetic Audiences Can’t Replace Authentic User Interviews

The Human Behind the Machine: Why Synthetic Audiences Can’t Replace Authentic User Interviews

The Human Behind the Machine: Why Synthetic Audiences Can’t Replace Authentic User Interviews

The Human Behind the Machine: Why Synthetic Audiences Can’t Replace Authentic User Interviews

The Human Behind the Machine: Why Synthetic Audiences Can’t Replace Authentic User Interviews