Building a New Testing Mindset for AI-Powered Web Apps

The technology landscape is undergoing a profound transformation. For decades, businesses have relied on traditional web-based software to enhance user experiences and streamline operations. Today, a new wave of innovation is redefining how applications are built, powered by the rise of AI-driven development.

However, as leaders adopt AI, a key challenge has emerged: ensuring its quality, trust, and reliability. Unlike traditional systems with clear requirements and predictable outputs, AI introduces complexity and unpredictability, making Quality Assurance (QA) both more challenging and more critical. Business decision-makers must now rethink their QA strategy and investments to safeguard reputation, reduce risk, and unlock the full potential of intelligent solutions.

If your organization is investing in AI capabilities, understanding this quality challenge isn’t just a technical concern, it’s a business necessity that could determine the success or failure of your AI initiatives. In this blog, we’ll explore how AI-driven development is reshaping QA — and what organizations can do to ensure quality keeps pace with innovation.

Why traditional testing falls short

Let’s take a practical example. Imagine an Interview Agent built on top of a large language model (LLM) using OpenAI API. Its job is to screen candidates, ask context-relevant questions, and summarize responses. Sounds powerful, but here’s where traditional testing challenges emerge:

Non-deterministic outputs

Unlike a rules-based form, the AI agent might phrase the same question differently each time. This variability makes it impossible to write a single “pass/fail” test script.

Dynamic learning models

Updating the model or fine-tuning with new data can change behavior overnight. Yesterday’s green test might fail today.

Contextual accuracy

An answer can be grammatically correct yet factually misleading. Testing must consider not just whether the system responds, but whether it responds appropriately.

Ethical and compliance risks

AI systems can accidentally produce biased or non-compliant outputs. Testing must expand beyond functionality to include fairness, transparency, and safety.

Clearly, a new approach is needed.

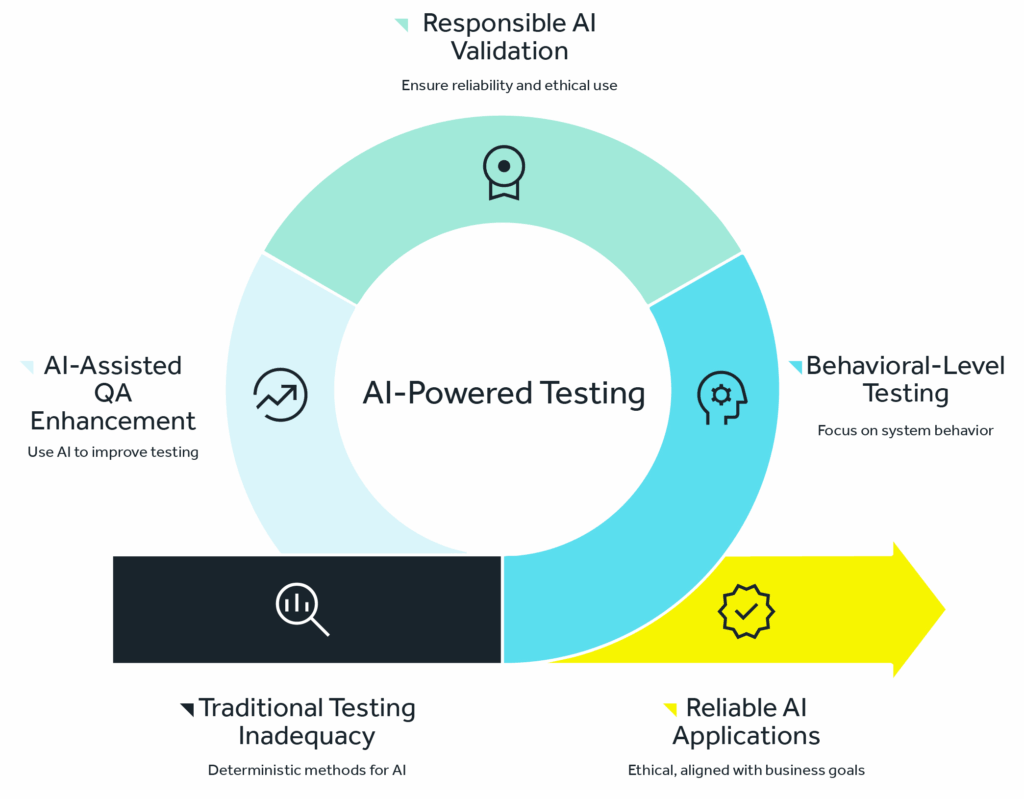

AI-powered testing

So, what does a modern approach to testing look like? We call it the AI-powered test, a fresh approach that redefines quality assurance for intelligent systems. Instead of force-fitting traditional, deterministic testing methods onto non-deterministic AI models, businesses need a flexible, risk-aware, and AI-assisted framework.

At its core, AI-powered testing means:

- Testing at the behavioral level, not just the functional level.

- Shifting the question from “Does it work?” to “Does it work responsibly, consistently, and at scale?”

- Using AI itself as a tool to enhance QA, not just as a subject to be tested.

This approach ensures that organizations not only validate whether AI applications function, but also whether they are reliable, ethical, and aligned with business goals.

Pillars of AI-powered testing

To make this shift practical, we recommend you plan your AI QA strategy around the following key pillars:

1. Scenario-based validation

Instead of expecting identical outputs, testers validate whether responses are acceptable across a wide range of real-world scenarios. For example, does the Interview Agent always ask contextually relevant questions, regardless of candidate background or job description?

2. AI evaluation through flexibility

AI systems should be judged on quality ranges rather than rigid outputs. Think of it as setting “guardrails” instead of a single endpoint. Does the AI stay within acceptable tone, accuracy, and intent even if the exact wording varies?

3. Continuous monitoring and drift detection

Since AI models evolve, testing can’t be a one time activity. Organizations must invest in continuous monitoring to detect shifts in accuracy, fairness, or compliance. Just as cybersecurity requires constant vigilance, so too does AI assurance.

4. Human judgment

Automation is powerful, but human judgment remains essential. QA teams should include domain experts who can review edge cases and make subjective assessments that machines can’t. For business leaders, this means budgeting not only for automation tools but also for skilled oversight.

The future is moving from ‘AI for testing’ to ‘testing for AI’

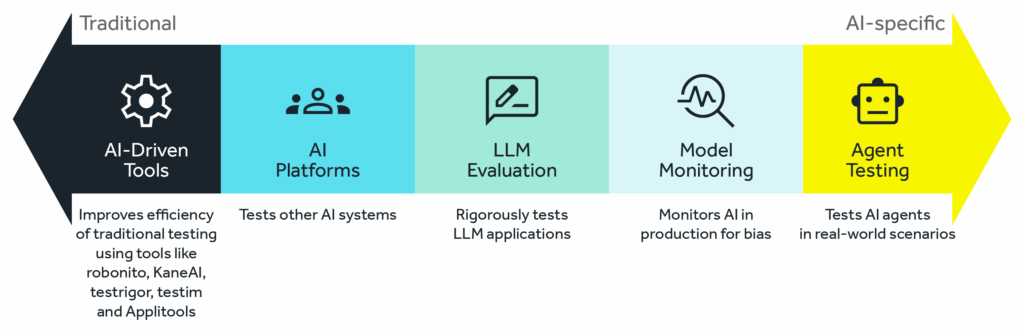

AI is reshaping every part of the technology ecosystem, and software testing is no exception. We have AI-driven test automation tools like robonito, KaneAI, testRigor, testim, loadmill and Applitools. These are powerful allies that use AI to make traditional testing faster and more efficient. They can write test scripts from plain English, self-heal when the user interface changes, and intelligently identify visual bugs.These tools are excellent for improving the efficiency of testing the traditional applications.

But the real frontier is “AI platforms designed to test other AIs.” This is where the future lies. Think of these as “AI test agents,” specialized AI systems built to audit, challenge, and validate other AI. This emerging space is transforming how we think about quality assurance in the age of intelligent systems.

Key directions in “testing for AI”

- LLM evaluation platforms: New platforms are being developed to rigorously test applications powered by LLMs. For example , an Interview Agent can generate thousands of diverse, adversarial prompts to check for robustness, test for toxic or biased outputs, and compare the model’s responses against a predefined knowledge base to check for factual accuracy/hallucinations.

- Model monitoring & bias detection tools: Companies like Fiddler AI and Arize AI provide platforms that monitor your AI in production. They act as a continuous QA system, flagging data drift (when real-world data starts to look different from training data) and detecting in real-time if the model’s outputs are becoming biased or skewed.

- Testing for AI: There are many companies working on AI agent testing and agent-to-agent testing tools. For example, LambdaTest recently launched a beta version of its agent-to-agent testing platform — a unified environment for testing AI agents, including chatbots and voice assistants, across real-world scenarios to ensure accuracy, reliability, efficiency, and performance.

Why this matters for business leaders

From a C-suite perspective, investing in AI-powered testing isn’t just a technical decision, it’s a business imperative. Here’s why:

- Customer trust

A chatbot that provides incorrect medical advice or a hiring tool that shows bias can damage brand reputation overnight. Quality isn’t just about uptime anymore it’s about ethical, reliable experiences.

- Regulators are watching

AI regulation is tightening worldwide. Whether it’s GDPR, the EU AI Act, or emerging US frameworks, organizations will be held accountable for how their AI behaves. Testing for compliance should be part of your risk management strategy.

- Cost of failure

With AI embedded in core business processes, errors don’t just affect a single user, they can cascade across markets and stakeholders. Proactive QA is far cheaper than reactive damage control.

- Competitive advantage

Companies that can assure reliable, responsible AI will differentiate themselves. Just as “secure by design” became a competitive market in software, “trustworthy AI” will become a business differentiator.

Building your AI QA roadmap

So, how should an executive get started? Here’s a phased approach we recommend to clients:

Phase 1: Assess current gaps

Map where AI is currently embedded in your systems. Identify areas where quality risks could impact customers, compliance, or brand reputation.

Phase 2: Redefine QA metrics

Move beyond pass/fail. Introduce new metrics such as accuracy ranges, bias detection, explainability scores, and response relevance.

Phase 3: Invest in AI-powered tools

Adopt platforms that can automate scenario generation, inconsistency detection, and continuous monitoring. Look for solutions that scale with your AI adoption.

Phase 4: Build cross-functional oversight

Build a governance model that includes compliance, legal, and business leaders alongside IT. Quality must reflect business priorities, not just technical checklists.

Phase 5: Establish continuous governance

Treat AI QA as an ongoing discipline, not a project phase. Regularly review model performance, monitor for drift, and update guardrails as the business evolves.

Final thought

The era of AI-driven applications is here and it’s accelerating. But with innovation comes responsibility. Traditional QA approaches built for deterministic systems are no longer sufficient. By adopting an AI-powered testing strategy, organizations can ensure their AI systems are not only functional but also ethical, reliable, and aligned with business goals.

The message for leaders is clear: if you want to harness AI as a competitive advantage, you must also invest in the processes that make it trustworthy. Modern QA is no longer just about preventing bugs, it’s about protecting your brand, your customers, and securing your organization’s future in an AI-first world.

Ensure your AI delivers value you can trust. Connect with our team to define a scalable, responsible AI QA strategy.